Last week, I spent the day with the Big Lottery Fund (BLF) and a group of evaluators who are running five large evaluations of major BLF programmes. The evaluators meet regularly to exchange experiences and learn from each other. This meeting focused on evaluation and complexity, and BLF asked me to set the scene by talking about what complexity is and why it is relevant for evaluation. Afterwards, I enjoyed listening to the evaluators discuss how they made sense of complexity and what it means for their evaluations. Here is a summary of my input and some insights from the subsequent discussion.

Why is understanding complexity important for evaluation?

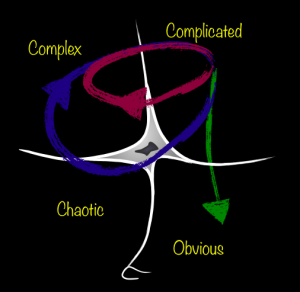

Or maybe it is better to start with the question: “When is understanding complexity important for evaluation?” Not everything is complex, and using a complexity-sensitive strategy in an ordered context is as unhelpful as using a strategy designed for an ordered context in a complex situation. So I started by introducing different types of systems (ordered, complex, chaotic) and the strategies suited to each. I introduced Dave Snowden’s Cynefin framework (picture), a brilliant tool for making sense of what is complex and what is not; it differentiates between five contexts or ‘domains’. The participants then used the Cynefin framework to work out which aspects of their own evaluations are complex and which are not.

Once we had established that some central aspects of the evaluations are indeed complex, what then? First, we need to understand complexity better. So I talked about complex adaptive systems (CAS) and their characteristics. A CAS is made up of a large number of actors who continuously interact with each other. Through these rich interactions a network of interdependencies is built, out of which, over time, an overarching structure emerges that gives the system a certain disposition and propensity for change. This structure is built dynamically and updated continuously through the interactions among individual actors, and it cannot be reduced to the behaviour of any individual.

While we can understand the disposition of a complex system, we cannot predict how the future will unfold. So we cannot predict exactly what results an activity or intervention will produce, the corollary being that we cannot design an intervention to produce a pre-defined set of results. The traditional, ordered approach of evaluating an intervention’s performance against pre-defined outcomes therefore cannot work. Rather than pre-defining desired outcomes, the strategy in complex systems is to try different things to stimulate change, then amplify beneficial change (which we recognise once we see it and which may differ from anything we could have imagined) while dampening negative change. The role of the evaluation must therefore be to help a change initiative understand what change its probes stimulated and, in the end, to make a summative statement about whether the initiative has successfully stimulated and amplified (or stabilised) positive change.

Again, not all aspects of the initiatives being evaluated are complex. In the case of the ‘Fulfilling Lives: A Better Start’ programme, which looks at early childhood development, there is a fairly clear understanding of what young children need in order to have better lives (things like healthy nutrition, attachment and care from their parents, and the opportunity to have their own experiences and learn). This causal relationship is therefore a complicated aspect of childhood development, not a complex one: it is repeatable and arguably holds true across different contexts. This means the evaluation can use traditional methods, such as comparing intervention and non-intervention areas, to test whether these outcomes are achieved in the intervention area as a result of the programme.

In contrast, changing the system of service provision in the programme’s chosen intervention areas so that these things are available to children is certainly complex. We don’t know exactly how to approach it or what the end result will look like, that is, how the services need to be designed to function effectively. This depends on the current level and quality of service provision in each area, the area’s history, and many other factors such as social cohesion and levels of trust. Different intervention areas might end up with service delivery systems that look different and take different paths to get there, or they might not get there at all. Consequently, the evaluations need to take a different approach from the one described above. They need to evolve together with the programme and support its effort to learn what works in which context.

What are the implications for the five evaluations?

After my input, we heard presentations from two of the five BLF evaluations about how they make sense of complexity. None of the five evaluations was explicitly designed with complexity in mind, so these presentations were an attempt to assess the evaluation designs retrospectively and understand to what extent they respond to the challenges of complexity.

Both evaluations shared a number of ways in which they respond to these challenges. Although the researchers did not explicitly call them ‘challenges of complexity’, they were aware of them when designing the evaluations.

- Both evaluations use a mixed-methods approach combining qualitative and quantitative data collection. They establish statistically what influences what, but also gain a better understanding of the context and the ‘why/how’ behind causal connections, so they can say, for example, what works differently in different regions and potentially why.

- Both evaluations employ participatory methods, in part even in designing the evaluation methods themselves. This ensures that they are sensitive to the context and can capture the changes the target population deems important, not those the researchers or BLF deem important.

- Both evaluations try to ‘layer’ different theories in order to capture different possible perspectives on the problems at hand.

- Both take a long-term approach, following cohorts but also conducting cross-sectional studies at particular points in time. This ensures that they capture change that takes longer to emerge, which is typical of social change.

- Both evaluations have built-in learning and communication strategies to engage early and continuously with the people implementing the change initiatives on the ground, supporting them in adopting a learning approach and enabling them to adjust their implementation strategies based on what works and what does not.

In the discussion after the presentations, one point stood out in particular. Evaluations in complex settings need to take different perspectives, use different methods and gather different types of data. There is no single ‘test’ that can say with finality ‘yes, it worked, and this is how’. So the evaluations need to build up a ‘preponderance of evidence’, showing that it is more likely than not that the facts presented are true. Or, as one of the presenters put it: the data needs to build layers of Swiss cheese stacked on top of each other until no hole cuts through the whole pile. This also matters for communicating the results of the evaluation accurately and convincingly to different audiences. Some audiences are more convinced by ‘hard data’, i.e. numbers and statistical tests; others are more receptive to ‘soft data’ like case studies or stories about individual experiences. Furthermore, collecting diverse data ensures that we can say something about effects even if the interventions, and potentially the objectives, change over time, as they tend to in complex settings over long timeframes.

It was interesting to see that, even though the participants said they had not really come into contact with complexity thinking, they showed an intuitive understanding of many of its insights and had designed their evaluations accordingly, at least to some extent. Understanding complexity and its implications from the beginning, though, could help further improve evaluation design, manage expectations and improve the use of evaluation results. It could also prevent what one participant described: evaluations that are designed for the ordered space but sooner or later descend into chaos.

Here are the slides I used for my presentation on complexity.

Pingback: From the feed: More complexity – Chris Corrigan